My colleagues and I have been busy developing new products and services. Learn more about what we’ve been up to when we re-launch EvalBlog.com on January 1, 2012. Stay tuned!

From Evaluation 2010 to Evaluator 911

The West Coast Reception hosted by San Francisco Bay Area Evaluators (SFBAE), Southern California Evaluation Association (SCEA), and Claremont Graduate University (CGU) is an AEA Conference tradition and I look forward to it all year long. I never miss it (and as Director of SFBAE, I had better not).

But as I was leaving the hotel to head to the reception, my coworker came up to me and whispered, “I am in severe pain—I need to go to the hospital right now.” Off we went to the closest emergency room, where she was admitted, sedated, and subjected to a mind-numbing variety of tests. After some hours of medical mayhem, she called me into her room and said, “The doctor wants me to rest here while we wait for the test results to come back. That could take a couple of hours. I’m comfortable and not at any risk, so why don’t you go to the reception? It’s only two blocks from here. I’ll call you when we get the test results.”

What a trooper!

So I jogged over to the reception and found that the party was still going strong hours after it was scheduled to close down (that’s a West Coast Reception tradition). Kari Greene, an OPEN member who may be one of the funniest people on the planet, had us all in stitches as she regaled us with stories of evaluations run amok (other people’s, of course). Jane Davidson of Genuine Evaluation fame (pictured below) explained that drinking sangria is simple, making sangria is complicated, but making more sangria after drinking a few glasses is complex. I am not sure what that means, but I saw a lot of heads nodding. The graduate students in evaluation from CGU were embracing the “opportunivore” lifestyle as they filled their stomachs (and their pockets) with shrimp, empanadas, and canapés.

Then my phone rang—my coworker’s tests were clear and the situation resolved. I left the party (still going strong) and took her back to the hotel, at which point she said, “I’m glad you made it to the reception—we can’t break the streak. If you end up in the hospital next year we’ll bring the party to you!”

And that, in a nutshell, is the spirit of the conference—connection, community, and continuity. Well, that and shrimp in your pockets.

Filed under AEA Conference, Evaluation, Program Evaluation

The AEA Conference (So Far)

The AEA conference has been great. I have been very impressed with the presentations that I have attended so far, though I can’t claim to have seen the full breadth of what is on offer, as there are roughly 700 presentations in total. Here are a few that impressed me the most.

Filed under AEA Conference, Evaluation Quality, Program Evaluation

AEA 2010 Conference Kicks Off in San Antonio

In the opening plenary of the Evaluation 2010 conference, AEA President Leslie Cooksy invited three leaders in the field (Eleanor Chelimsky, Laura Leviton, and Michael Patton) to speak on The Tensions Among Evaluation Perspectives in the Age of Obama: Influences on Evaluation Quality, Thinking and Values. They covered topics ranging from how government should use evaluation information to how Jon Stewart of The Daily Show outed himself as an evaluator during his Rally to Restore Sanity and/or Fear (“I think you know that the success or failure of a rally is judged by only two criteria: the intellectual coherence of the content, and its correlation to the engagement—I’m just kidding. It’s color and size. We all know it’s color and size.”).

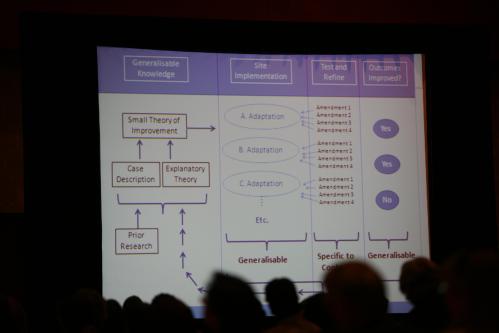

One piece that resonated with me was Laura Leviton’s discussion of how the quality of an evaluation is related to our ability to apply its results to future programs—what is referred to as generalization. She presented a graphic that described a possible process for generalization that seemed right to me; it’s what should happen. But how it happens was not addressed, at least in the short time in which she spoke. It is no small task to gather prior research and evaluation results, translate them into a small theory of improvement (a program theory), and then adapt that theory to fit specific contexts, values, and resources. Who should be doing that work? What are the features that might make it more effective?

Stewart Donaldson and I recently co-authored a paper on that topic that will appear in New Directions for Evaluation in 2011. We argue that stakeholders are and should be doing this work, and we explore how the logic underlying traditional notions of external validity—considered by some to be outdated—can be built upon to create a relatively simple, collaborative process for predicting the future results of programs. The paper is a small step toward raising the discussion of external validity (how we judge whether a program will work in the future) to the same level as the discussion of internal validity (how we judge whether a program worked in the past), while trying to avoid the rancor that has been associated with the latter.

More from the conference later.

Filed under AEA Conference, Evaluation Quality, Gargani News, Program Evaluation

Good versus Eval

After another blogging hiatus, the battle between good and eval continues. Or at least my blog is coming back online as the American Evaluation Association’s Annual Conference in San Antonio (November 10-13) quickly approaches.

I remember that twenty years ago evaluation was widely considered the enemy of good because it took resources away from service delivery. Now evaluation is widely considered an essential part of service delivery, but the debate over what constitutes a good program and a good evaluation continues. I will be joining the fray when I make a presentation as part of a session entitled Improving Evaluation Quality by Improving Program Quality: A Theory-Based/Theory-Driven Perspective (Saturday, November 13, 10:00 AM, Session Number 742). My presentation is entitled The Expanding Profession: Program Evaluators as Program Designers, and I will discuss how program evaluators are increasingly being called upon to help design the programs they evaluate, and why that benefits program staff, stakeholders, and evaluators. Stewart Donaldson is my co-presenter (The Relationship between Program Design and Evaluation), and our discussants are Michael Scriven, David Fetterman, and Charles Gasper. If you know these names, you know to expect a “lively” (OK, heated) discussion.

If you are an evaluator in California, Oregon, Washington, New Mexico, Hawaii, any other place west of the Mississippi, or anywhere that is west of anything, be sure to attend the West Coast Evaluators Reception on Thursday, November 11, at 9:00 PM at the Zuni Grill (223 Losoya Street, San Antonio, TX 78205), co-sponsored by San Francisco Bay Area Evaluators and Claremont Graduate University. It is a conference tradition and a great way to network with colleagues.

More from San Antonio next week!

Filed under Design, Evaluation Quality, Gargani News, Program Design, Program Evaluation

Quality is a Joke

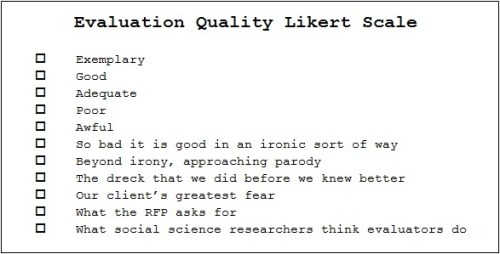

If you have been following my blog (who hasn’t?), you know that I am writing on the topic of evaluation quality, the theme of the 2010 annual conference of the American Evaluation Association taking place November 10-13. It is a serious subject. Really.

But here is a joke, though perhaps only the evaluarati (you know who you are) will find it amusing.

A quantitative evaluator, a qualitative evaluator, and a normal person are waiting for a bus. The normal person suddenly shouts, “Watch out, the bus is out of control and heading right for us! We will surely be killed!”

Without looking up from his newspaper, the quantitative evaluator calmly responds, “That is an awfully strong causal claim you are making. There is anecdotal evidence to suggest that buses can kill people, but the research does not bear it out. People ride buses all the time and they are rarely killed by them. The correlation between riding buses and being killed by them is very nearly zero. I defy you to produce any credible evidence that buses pose a significant danger. It would really be an extraordinary thing if we were killed by a bus. I wouldn’t worry.”

Dismayed, the normal person starts gesticulating and shouting, “But there is a bus! A particular bus! That bus! And it is heading directly toward some particular people! Us! And I am quite certain that it will hit us, and if it hits us it will undoubtedly kill us!”

At this point the qualitative evaluator, who was observing this exchange from a safe distance, interjects, “What exactly do you mean by bus? After all, we all construct our own understanding of that very fluid concept. For some, the bus is a mere machine, for others it is what connects them to their work, their school, the ones they love. I mean, have you ever sat down and really considered the bus-ness of it all? It is quite immense, I assure you. I hope I am not being too forward, but may I be a critical friend for just a moment? I don’t think you’ve really thought this whole bus thing out. It would be a pity to go about pushing the sort of simple linear logic that connects something as conceptually complex as a bus to an outcome as one-dimensional as death.”

Very dismayed, the normal person runs away screaming, and the bus collides with the quantitative and qualitative evaluators, killing both instantly.

Very, very dismayed, the normal person begins pleading with a bystander, “I told them the bus would kill them. The bus did kill them. I feel awful.”

To which the bystander replies, “Tut tut, my good man. I am a statistician, and I can tell you for a fact that with a sample size of 2 and no proper control group, we cannot possibly conclude that it was the bus that did them in.”

To the extent that this is funny (I find it hilarious, but I am afraid that I may share Sir Isaac Newton’s sense of humor) it is because it plays on our stereotypes about the field. Quantitative evaluators are branded as aloof, overly logical, obsessed with causality, and too concerned with general rather than local knowledge. Qualitative evaluators, on the other hand, are suspect because they are supposedly motivated by social interaction, overly intuitive, obsessed with description, and too concerned with local knowledge. And statisticians are often looked upon as the referees in this cat-and-dog world, charged with setting up and arbitrating the rules by which evaluators in both camps must (or must not) play.

The problem with these stereotypes, like all stereotypes, is that they are inaccurate. Yet we cling to them and make judgments about evaluation quality based upon them. But what if we shift our perspective to that of the (tongue-in-cheek) normal person? This is not an easy thing to do if, like me, you spend most of your time inside the details of the work and the debates of the profession. Normal people want to do the right thing, feel the need to act quickly to make things right, and hope to be informed by evaluators and others who support their efforts. Sometimes normal people are responsible for programs that operate in particular local contexts, and at other times they are responsible for policies that affect virtually everyone. How do we help normal people get what they want and need?

I have been arguing that we should help them, and when we do, we have met one of my three criteria for quality: satisfaction. The key is first to acknowledge that we serve others, and then to do our best to understand their perspective. If we are weighed down by the baggage of professional stereotypes, it can prevent us from choosing well from among all the ways we can meet the needs of others. I suppose that stereotypes can be useful when they help us laugh at ourselves, but if we come to believe them, our practice can become unaccommodatingly narrow and the people we serve (normal people) will soon begin to run away (screaming) from us and the field. That is nothing to laugh at.

Filed under Evaluation, Evaluation Quality, Program Evaluation

What the Hell is Quality?

In Zen and the Art of Motorcycle Maintenance, an exasperated Robert Pirsig famously asked, “What the hell is quality?” and expended a great deal of energy trying to work out an answer. As I find myself considering the meaning of quality evaluation, the theme of the upcoming 2010 Conference of the American Evaluation Association, it feels like déjà vu all over again. There are countless definitions of quality floating about (for a short list, see Garvin, 1984), but arguably few if any examples of the concept being applied to modern evaluation practice. So what the hell is quality evaluation? And will I need to work out an answer for myself?

Luckily there is some agreement out there. Quality is usually thought of as an amalgam of multiple criteria, and quality is judged by comparing the characteristics of an actual product or service to those criteria.

Isn’t this exactly what evaluators are trained to do?

Yes. And judging quality in this way poses some practical problems that will be familiar to evaluators:

Who devises the criteria?

Evaluations serve many, often competing, interests. Funders, clients, direct stakeholders, and professional peers make the short list. All have something to say about what makes an evaluation high quality, but they do not have equal clout. Some are influential because they have market power (they pay for evaluation services). Others are influential because they have standing in the profession (they are considered experts or thought leaders). And as the table below illustrates, some are influential because they have both (funders) and others lack influence because they have neither (direct stakeholders). More on this in a future blog.

Who makes the comparison?

Quality criteria may be devised by one group and then used by another to judge quality. For example, funders may establish criteria and then hire independent evaluators (professional peers) who use the criteria to judge the quality of evaluations. This is what happens when evaluation proposals are reviewed and ongoing evaluations are monitored. More on this in a future blog.

How is the comparison made?

Comparisons can be made in any number of ways, but (imperfectly) we can lump them into two approaches: the explicit, cerebral, and systematic approach, and the implicit, intuitive, and inconsistent approach. Individuals tend to judge quality in the latter fashion. It is not a bad way to go about things, especially when considering everyday purchases (a pair of sneakers or a tuna fish sandwich). When considering evaluation, however, it would seem best to judge quality in the former fashion. But is it? More on this in a future blog.

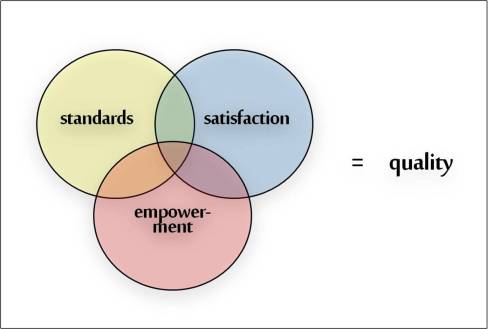

So what the hell is quality? This is where I propose an answer that I hope is simple yet covers most of the relevant issues facing our profession. Quality evaluation comprises three distinct things, each important on its own but reflecting quality only in combination. They are:

Standards

When the criteria used to judge quality come from those with professional standing, the criteria describe an evaluation that meets professional standards. Standards focus on technical and nontechnical attributes of an evaluation that are under the direct control of the evaluator. Perhaps the two best examples of this are the Program Evaluation Standards and the Program Evaluations Metaevaluation Checklist.

Satisfaction

When the criteria used to judge quality come from those with market power, the criteria describe an evaluation that would satisfy paying customers. Satisfaction focuses on whether expectations—reasonable or unreasonable, documented in a contract or not—are met by the evaluator. Collectively, these expectations define the demand for evaluation in the marketplace.

Empowerment

When the criteria used to judge quality come from direct stakeholders with neither professional standing nor market power, the criteria change the power dynamic of the evaluation. Empowerment evaluation and participatory evaluation are perhaps the two best examples of evaluation approaches that look to those served by programs to help define a quality evaluation.

Standards, satisfaction, and empowerment are related, but they are not interchangeable. One can be dissatisfied with an evaluation that exceeds professional standards, or empowered by an evaluation with which funders were not satisfied. I will argue that the quality of an evaluation should be measured against all three sets of criteria. Is that feasible? Desirable? That is what I will hash out over the next few weeks.

Filed under Evaluation, Evaluation Quality

The Laws of Evaluation Quality

It has been a while since I blogged, but I was inspired to give it another go by Evaluation 2010, the upcoming annual conference of the American Evaluation Association (November 10-13 in San Antonio, Texas). The conference theme is Evaluation Quality, something I think about constantly. There is a great deal packed into those two words, and my blog will be dedicated to unpacking them as we lead up to the November AEA conference. To kick off that effort, I present a few lighthearted “Laws of Evaluation Quality” that I have stumbled upon over the years. They poke fun at many of the serious issues I will consider in the upcoming months and that make ensuring the quality of an evaluation a challenge. Enjoy.

Stakeholder’s First Law of Evaluation Quality

The quality of an evaluation is directly proportional to the number of positive findings it contains.

Corollary to Stakeholder’s First Law

A program evaluation is an evaluation that supports my program.

The Converse to Stakeholder’s First Law

The number of flaws in an evaluation’s research design increases without limit with the number of null or negative findings it contains.

Corollary to the Converse of Stakeholder’s First Law

Everyone is a methodologist when their dreams are crushed.

Academic’s First Law of Evaluation Quality

Evaluations are done well if and only if they cite my work.

Corollary to Academic’s First Law

My evaluations are always done well.

Academic’s Lemma

The ideal ratio of publications to evaluations is undefined.

Student’s First Law of Evaluation Quality

The quality of any given evaluation is wholly dependent on who is teaching the class.

Student’s Razor

Evaluation theories should not be multiplied beyond necessity.

Student’s Reality

Evaluation theories will be multiplied far beyond necessity in every written paper, graduate seminar, evaluation practicum, and evening of drinking.

Evaluator’s Conjecture

The quality of any evaluation is perfectly predicted by the brevity of the client’s initial description of the program.

Evaluator’s Paradox

The longer it takes a grant writer to contact an evaluator, the more closely the proposed evaluation approaches a work of fiction and the more likely it is to be funded.

Evaluator’s Order Statistic

Evaluation is always the last item on the meeting agenda unless you are being fired.

Funder’s Principle of Same Boated-ness

During the proposal process, the quality of a program is suspect. Upon acceptance, it is evidence of the funder’s social impact.

Corollary to Funder’s Principle

Good evaluations don’t rock the boat.

Funder’s Paradox

When funders request an evaluation that is rigorous, sophisticated, or scientific, they are less likely to read it yet more likely to believe it—regardless of its actual quality.

Filed under Evaluation, Evaluation Quality

Fruitility (or Why Evaluations Showing “No Effects” Are a Good Thing)

The mythical character Sisyphus was punished by the gods for his cleverness. As mythological crimes go, cleverness hardly rates, and his punishment was lenient: all he had to do was place a large boulder on top of a hill and then he could be on his way.

The first time Sisyphus rolled the boulder to the hilltop, I imagine he was intrigued as he watched it roll back down on its own. Clever Sisyphus confidently tried again, but the gods, intent on condemning him to an eternity of mindless labor, had used their magic to ensure that the rock always rolled back down.

Could there be a better way to punish the clever?

Perhaps not. Nonetheless, my money is on Sisyphus because sometimes the only way to get it right is to get it wrong. A lot.

This is the principle of fruitful futility, or, as I call it, fruitility.

Filed under Commentary, Evaluation, Program Evaluation, Research

Santa Cause

I’ve been reflecting on the past year. What sticks in my mind is how fortunate I am to spend my days working with people who have a cause. Some promote their causes narrowly, for example, by ensuring that education better serves a group of children or that healthcare is available to the poorest families in a region. Others pursue causes more broadly, advocating for human rights and social justice. In the past, both might have been labeled impractical dreamers, utopian malcontents, or, worse, risks to national security. Yet today they are respected professionals, envied even by those who have achieved great success in more traditional, profit-motivated endeavors. That’s truly progress.

I also spend a great deal of time buried in the technical details of evaluation—designing research, developing tests and surveys, collecting data, and performing statistical analysis—so I sometimes lose sight of the spirit that animates the causes I serve. However, it isn’t long before I’m led back to the professionals who, even after almost 20 years, continue to inspire me. I can’t wait to spend another year working with them.

The next year promises to be more inspiring than ever, and I look forward to sharing my work, my thoughts, and the occasional laugh with all of you in the new year.

Best wishes to all.

John


Filed under Commentary, Evaluation, Gargani News, Program Evaluation

Tagged as evaluation, evaluations, Program Evaluation