In Zen and the Art of Motorcycle Maintenance, an exasperated Robert Pirsig famously asked, “What the hell is quality?” and expended a great deal of energy trying to work out an answer. As I find myself considering the meaning of quality evaluation, the theme of the upcoming 2010 Conference of the American Evaluation Association, it feels like déjà vu all over again. There are countless definitions of quality floating about (for a short list, see Garvin (1984)), but there are arguably few, if any, examples of the concept being applied to modern evaluation practice. So what the hell is quality evaluation? And will I need to work out an answer for myself?

Luckily there is some agreement out there. Quality is usually thought of as an amalgam of multiple criteria, and quality is judged by comparing the characteristics of an actual product or service to those criteria.

Isn’t this exactly what evaluators are trained to do?

Yes. And judging quality in this way poses some practical problems that will be familiar to evaluators:

Who devises the criteria?

Evaluations serve many, often competing, interests. Funders, clients, direct stakeholders, and professional peers make the short list. All have something to say about what makes an evaluation high quality, but they do not have equal clout. Some are influential because they have market power (they pay for evaluation services). Others are influential because they have standing in the profession (they are considered experts or thought leaders). And as the table below illustrates, some are influential because they have both (funders), and others lack influence because they have neither (direct stakeholders). More on this in a future blog post.

Who makes the comparison?

Quality criteria may be devised by one group and then used by another to judge quality. For example, funders may establish criteria and then hire independent evaluators (professional peers) who use those criteria to judge the quality of evaluations. This is what happens when evaluation proposals are reviewed and ongoing evaluations are monitored. More on this in a future blog post.

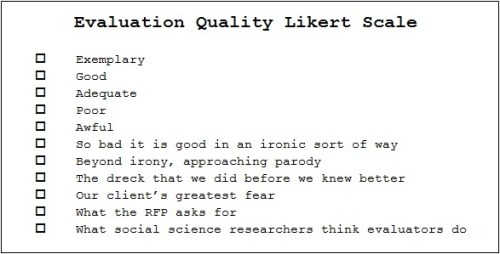

How is the comparison made?

Comparisons can be made in any number of ways, but (imperfectly) we can lump them into two approaches: the explicit, cerebral, and systematic approach, and the implicit, intuitive, and inconsistent approach. Individuals tend to judge quality in the latter fashion. It is not a bad way to go about things, especially when considering everyday purchases (a pair of sneakers or a tuna fish sandwich). When considering evaluation, however, it would seem best to judge quality in the former fashion. But is it? More on this in a future blog post.

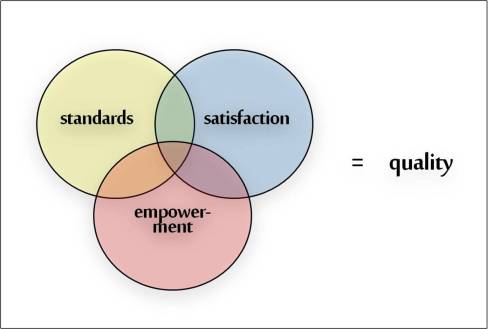

So what the hell is quality? This is where I propose an answer that I hope is simple yet covers most of the relevant issues facing our profession. Quality evaluation comprises three distinct things, each important on its own but reflecting quality only in combination. They are:

Standards

When the criteria used to judge quality come from those with professional standing, the criteria describe an evaluation that meets professional standards. Standards focus on technical and nontechnical attributes of an evaluation that are under the direct control of the evaluator. Perhaps the two best examples of this are the Program Evaluation Standards and the Program Evaluations Metaevaluation Checklist.

Satisfaction

When the criteria used to judge quality come from those with market power, the criteria describe an evaluation that would satisfy paying customers. Satisfaction focuses on whether expectations—reasonable or unreasonable, documented in a contract or not—are met by the evaluator. Collectively, these expectations define the demand for evaluation in the marketplace.

Empowerment

When the criteria used to judge quality come from direct stakeholders with neither professional standing nor market power, the criteria change the power dynamic of the evaluation. Empowerment evaluation and participatory evaluation are perhaps the two best examples of evaluation approaches that look to those served by programs to help define a quality evaluation.

Standards, satisfaction, and empowerment are related, but they are not interchangeable. One can be dissatisfied with an evaluation that exceeds professional standards, or empowered by an evaluation with which funders were not satisfied. I will argue that the quality of an evaluation should be measured against all three sets of criteria. Is that feasible? Desirable? That is what I will hash out over the next few weeks.

Fruitility (or Why Evaluations Showing “No Effects” Are a Good Thing)

The mythical character Sisyphus was punished by the gods for his cleverness. As mythological crimes go, cleverness hardly rates, and his punishment seemed lenient: all he had to do was place a large boulder on top of a hill, and then he could be on his way.

The first time Sisyphus rolled the boulder to the hilltop I imagine he was intrigued as he watched it roll back down on its own. Clever Sisyphus confidently tried again, but the gods, intent on condemning him to an eternity of mindless labor, had used their magic to ensure that the rock always rolled back down.

Could there be a better way to punish the clever?

Perhaps not. Nonetheless, my money is on Sisyphus because sometimes the only way to get it right is to get it wrong. A lot.

This is the principle of fruitful futility, or, as I call it, fruitility.

